Claudio Coppola

Robotics And Machine Learning Scientist

Senior Research Engineer @ Thehumanoid.ai

Biography

Claudio Coppola is an artificial intelligence and robotics expert with strong academic credentials and industry experience. He specializes in applying advanced machine learning techniques to enable more intelligent robot systems.

Claudio obtained his PhD in Robotics from the University of Lincoln in 2018, where his doctoral research focused on human activity understanding to assist robots in indoor environments. He also holds a Master’s degree cum laude in Computer Engineering from the University of Naples Federico II in Italy. His work has led to numerous publications in leading journals and conferences such as ICRA, IROS and IJSR.

As an Applied Scientist at Amazon Transportation Services, Claudio developed machine learning solutions for forecasting and pricing optimization. Previously, at Queen Mary University of London, he researched robot learning for manufacturing automation. His work on tactile perception, teleoperation and learning from demonstrations aimed to enable adaptable industrial robots. With his technical expertise and passion for AI and robotics, Claudio is an asset to initiatives that combine intelligent systems with automation.

- Robot-learning

- Human-Activity-Recognition

- Machine-Learning

- Time Series Forecasting

- Robotic-Perception

PhD in Robotics, 2018

University of Lincoln

MSc in Computer Science Engineering, 2013

Università degli Studi di Napoli Federico II

BSc in Computer Science Engineering, 2011

Università degli Studi di Napoli Federico II

Experience

Lead Instructor for the AI and Data Science courses.

- Delivered the course material to professionals interested in moving their careers toward data-oriented and AI-dependent roles.

- Advised students on their final project, objectives, and data choices.

- Performed research and supported PhD and Master's students as a member of the Advanced Robotics Queen-Mary (ARQ) Team.

- Contributed to and coordinated the work of PhD/MSc students for the EPSRC MAN3 Project, involving Shadow Robotics, Ocado and Google DeepMind.

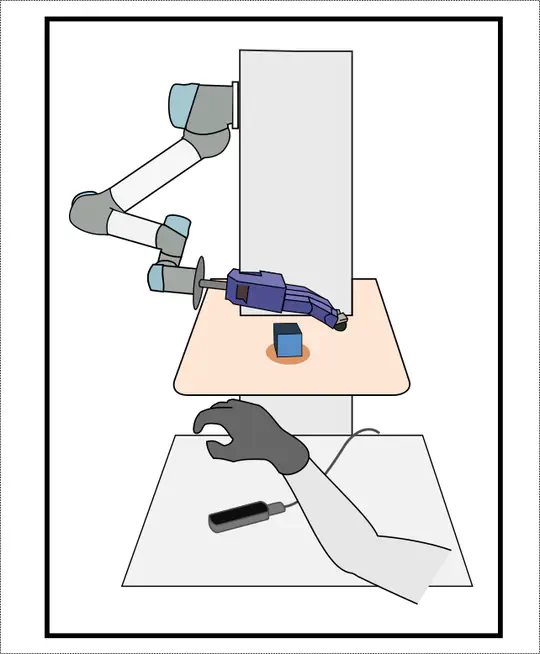

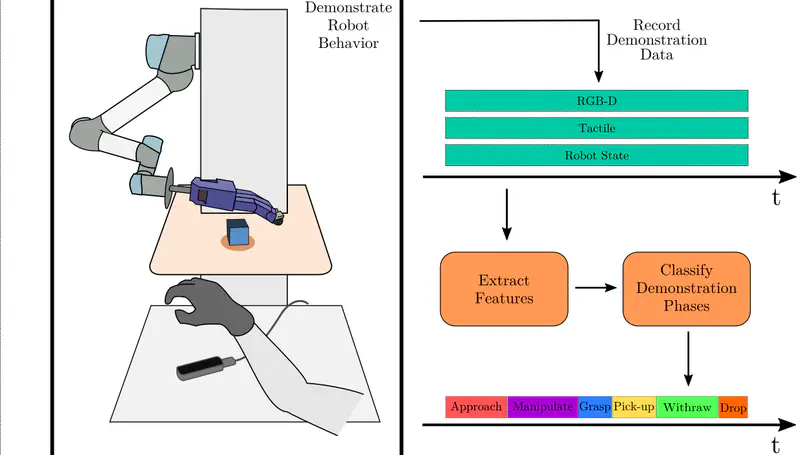

- Conducted research on learning by demonstration for robot manipulation by building a teleoperation platform and a demonstration segmentation system.

- Led the data science team and worked on several machine learning projects that were central to raising $8.9M in funding for the company to improve its analytics product.

- Object Detection in Social Media Images. This project helped to provide analytics about influencer topics to the company platform.

- Fake Influencer Detection. This project tried to detect fake accounts and accounts using fake influencers to boost their metrics.

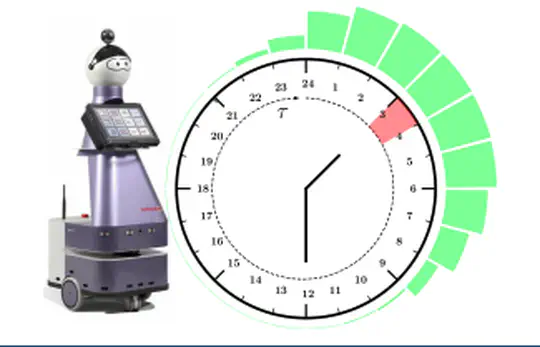

- Developed state-of-the-art Human Activity Recognition and Re-identification models used in the EU H2020 research projects ENRICHME and FLOBOT.

- Teaching Assistant for courses on Artificial Intelligence and Robotics.

- Automated daily BI maintenance tasks with a speed-up above 90% and developed SQL queries to generate BI reports.

Accomplishments

Recent Posts

Projects

Featured Publications

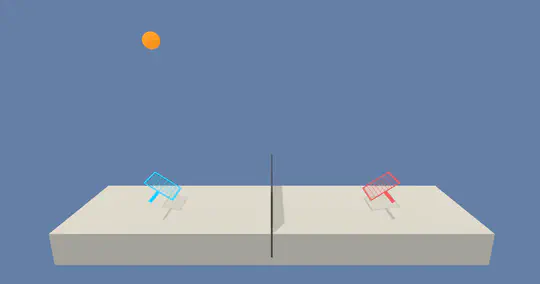

The paper presents a user study characterizing the effect of vibrotactile feedback on performance and cognitive load during teleoperated robotic grasping and manipulation. It uses an affordable bilateral system in which a Leap Motion and a vibrotactile glove control a sensorized robot hand, and shows that vibrotactile feedback improves teleoperation and reduces cognitive load, especially for complex in-hand manipulation tasks.

The paper proposes a method that uses motion and tactile features to automatically segment atomic actions from teleoperated demonstrations of complex robotic tasks, and provides a dataset of pick-and-place teleoperation with a dexterous hand. It shows that the proposed features generalize between episodes and similar objects, and that tactile sensing improves activity recognition within demonstrations.

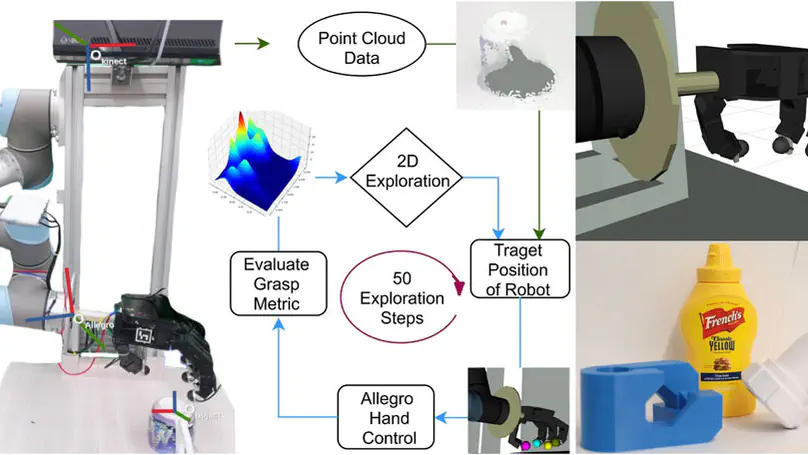

The paper presents an approach that uses depth sensing and tactile feedback to predict safe robotic grasps of completely unknown objects through haptic exploration. It compares grid search, standard Bayesian Optimization, and unscented Bayesian Optimization, with results showing that unscented Bayesian Optimization provides higher-confidence grasps with fewer exploratory observations.

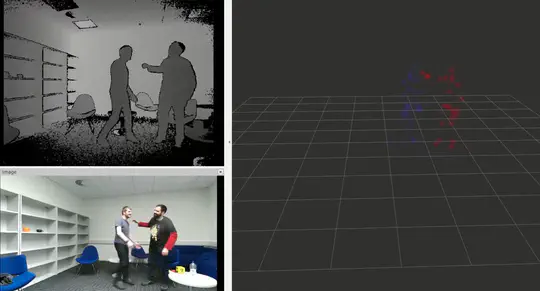

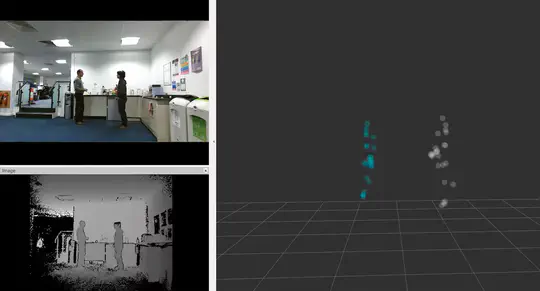

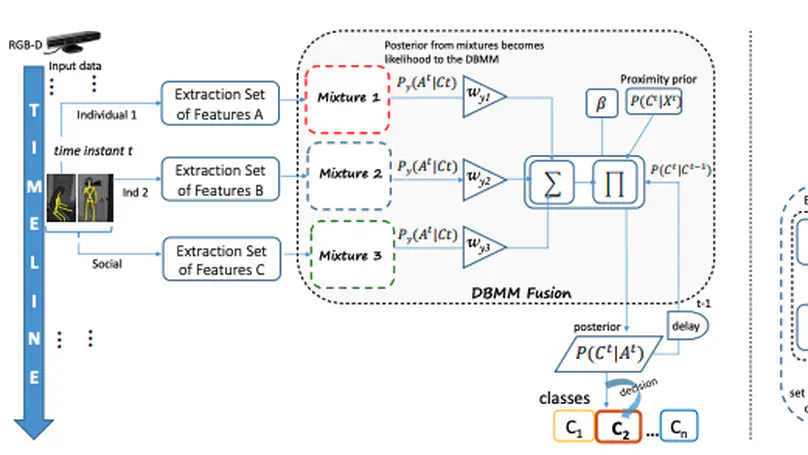

The paper presents a probabilistic approach that uses spatio-temporal and social features to recognize human-human interaction activities, and learns priors based on proxemics theory to improve classification. It also provides a new public RGB-D dataset of social activities, including risk situations. Results show that the proposed method, which merges different features and proximity priors, improves precision, recall and accuracy over alternative approaches.

Recent Publications

Popular Topics

Contact

Hi, feel free to send me an email!